HTTP Server from Scratch

In order to further my understanding of the HTTP protocol and network programming, I’ve developed an HTTP 1.0 server from scratch.

I wrote the server in Python and limited myself to only using modules from the standard library.

Here is a link to the source code on GitHub.

Network Sockets

Network sockets are used to define accessible points of entry to a software service on a network. They are typically identified by a combination of the transport protocol, IP address, and port number.

The transport protocol operates at layer 4 of the OSI model. It controls how much data is sent, where it is sent, and at what rate. Common transport protocols include TCP, UDP, and DCCP. The most ubiquitous of these is TCP.

Why TCP? TCP is typically chosen for web applications because it offers a reliable flow of communication between two sockets. Other protocols sacrifice this reliability for speed and efficiency when reliability is less important.

The IP Address (e.g. 192.168.1.1) is a way to identify a unique device on a computer network that uses the Internet Protocol for communication.

The port number (e.g. 80, 443) allows us to specify which application should receive the incoming network traffic, since one IP address can host many different applications.

Now that we understand these three building blocks, we can proceed and build a socket.

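```python
import socket

PORT = 8080
HOST = "localhost"

# Create a TCP (SOCK_STREAM) socket that uses IPv4 (AF_INET) addresses.
sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
# Associate the socket with its local address.
sock.bind((HOST, PORT))
# Mark the socket as passive, queueing up to 5 pending connections.
sock.listen(5)
```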

As you can see, we start by specifying two constants, PORT and HOST. These are the port number and IP address components discussed above, respectively.

We then initialize our socket instance, passing in two positional arguments, socket.AF_INET and socket.SOCK_STREAM. socket.AF_INET is the address family, which designates the type of addresses the socket can communicate with (in this case, IPv4). socket.SOCK_STREAM specifies TCP as our transport protocol.

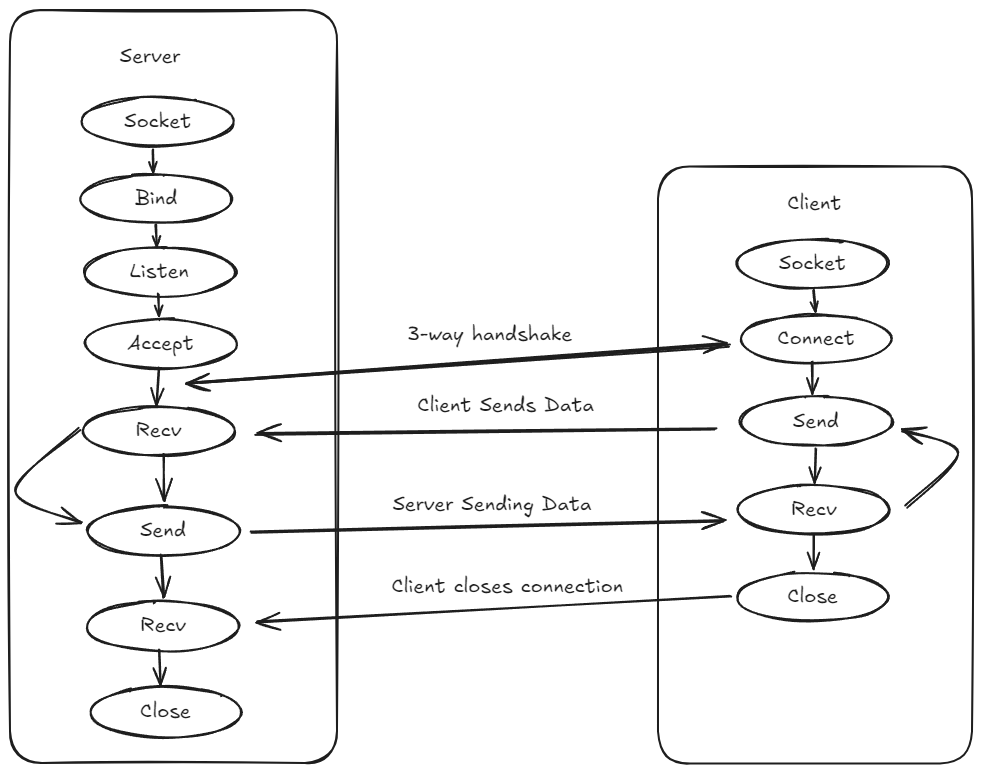

In order to understand the next two lines, we need to understand the API of a socket.

bind - associates the socket with its local address

listen - marks the socket as a passive socket, meaning it will be used to accept incoming connection requests using accept.

accept - pulls connection requests off a queue, creates a new connected socket, and returns that new socket.

connect - establishes a connection between two sockets

send - transmits a message to another socket

recv - receives messages from a socket.

close - closes the socket’s file descriptor

Here is a diagram that illustrates the flow of these API operations.

The server side of this diagram is the one that we are focused on building for this project.

Once the server socket is established, it will continuously accept incoming connections and create new sockets to handle further communication.
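A minimal sketch of that server-side flow, under a few assumptions: the helper names make_server_socket and serve_connections are hypothetical, the reply is a fixed placeholder rather than real HTTP, and the max_connections parameter exists only so the loop terminates. It is an illustration of bind, listen, accept, recv, send, and close, not the project's actual code.

```python
import socket


def make_server_socket(host="localhost", port=8080, backlog=5):
    """Create a TCP socket, bind it, and mark it as passive (listening)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Assumption: allow the address to be reused so quick restarts do not fail.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((host, port))
    sock.listen(backlog)
    return sock


def serve_connections(server_sock, max_connections):
    """Accept connections one at a time and answer each with a fixed reply."""
    for _ in range(max_connections):
        conn, _addr = server_sock.accept()  # blocks until a client connects
        with conn:
            conn.recv(1024)  # read (and ignore) whatever the client sent
            conn.sendall(b"hello\r\n")
        # Leaving the with-block closes the connected socket.
```

Calling serve_connections(make_server_socket(), 3) would answer three clients and then return. The listening socket stays open throughout, and each client gets its own short-lived connected socket.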

HTTP

I’ll start by saying that if you want to learn HTTP in depth, then you should read the official RFC for it. It is not very easy reading and beginners should seek a more general overview of the protocol, but it contains all of the specifications that someone building an HTTP server would be interested in.

Rather than going in-depth about any of the RFC’s sections, I will provide some key concepts of the HTTP protocol.

- It is an application layer protocol

- It is request/response based

- Messages follow a defined structure

Application Layer Protocol

An application layer protocol is a set of rules that dictate how service-to-service communication should be handled. The application layer is not concerned with how the host-to-host connection is established, but rather with how the two can communicate.

A loose analogy you can think of is when two humans who know multiple languages start communicating. They are not going to say every other word in a different language, but rather settle on an agreed language for the duration of the conversation.

One thing to keep in mind is that either human can break that agreement and start speaking in another language. This applies to computers as well. A protocol is an agreed-upon standard; if one party fails to hold up its end of the agreement, things can get messy. The potential failure to uphold the protocol is part of what makes an HTTP server difficult to implement: what do you do when the peer on your connected socket doesn’t follow the protocol?

Request/Response Based

HTTP is a request/response based protocol. This means there are two different types of messages, requests and responses.

Requests are sent by the client to trigger some action by the server.

Responses are sent from the server to the client in response to the request.

Let’s think about a typical HTTP exchange:

- Client tries to connect to the server

- Server socket accepts the connection and designates a new socket for communication

- Client sends a request to the new socket

- The new socket reads the request, and processes it accordingly

- The new socket returns a response

- The new socket closes the connection (NOTE: This is HTTP 1.0 specific)
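Seen from the client side, the exchange above can be sketched roughly as follows. The function name http10_get is hypothetical, and the sketch assumes the server behaves as described for HTTP 1.0, closing the connection once the response has been sent, which is what lets the client read until recv returns an empty result.

```python
import socket


def http10_get(host, port, path="/"):
    """Send one HTTP/1.0 GET request and return the raw response bytes."""
    request = f"GET {path} HTTP/1.0\r\n\r\n".encode("ascii")
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(request)
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # empty read: the server closed the connection
                break
            chunks.append(chunk)
    return b"".join(chunks)
```

For example, http10_get("localhost", 8080, "/index.html") would return the status line, headers, and body as one bytes object, assuming a server is listening on that port.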

Messages Follow a Defined Structure

In the process of creating an HTTP server, you’ll realize that you need to pull an arbitrary number of bytes off of the network queue and parse them for meaningful data.

HTTP messages contain three important components:

- Request line (or status line, in a response)

- Headers (optional)

- Entity (optional)

The request line is a mandatory field that provides the following components:

- Method (e.g. GET, POST, HEAD)

- URI

- Version number

These components are separated by spaces, and the end of the request line is marked by a CRLF (\r\n).
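As a rough illustration, a request line could be parsed like this. The function name parse_request_line is hypothetical, and insisting on exactly three space-separated parts is a simplifying assumption; a robust parser would need to be more forgiving.

```python
def parse_request_line(raw: bytes):
    """Return (method, uri, version) parsed from the first line of a request."""
    line, sep, _rest = raw.partition(b"\r\n")
    if not sep:
        raise ValueError("request line is not terminated by CRLF")
    parts = line.decode("ascii").split(" ")
    if len(parts) != 3:
        raise ValueError(f"malformed request line: {line!r}")
    method, uri, version = parts
    if not version.startswith("HTTP/"):
        raise ValueError(f"unexpected version: {version!r}")
    return method, uri, version
```

Given b"GET /index.html HTTP/1.0\r\n", this returns the tuple ("GET", "/index.html", "HTTP/1.0"). Anything that does not fit the expected shape raises ValueError, which a server could translate into a 400 response.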

Headers are a way for the client and server to pass meaningful metadata across the request/response lifecycle. An example of an HTTP header is the content-type header, which indicates the original media type of a resource before any content encoding is applied (e.g. image/gif, image/png, application/pdf).

This header is useful because it tells applications how they can render the data and make it meaningful. You don’t always know what type of content the server’s or client’s message may contain, so you must process it with the help of the content-type field (and others).

Each header is a name: value pair, and headers are separated by CRLFs.
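A minimal sketch of header parsing, assuming the header block has already been separated from the request line. The function name parse_headers is hypothetical. Header names are case-insensitive, so the sketch lower-cases them; it also keeps only the last value when a header repeats, which is a simplification.

```python
def parse_headers(block: bytes):
    """Parse CRLF-separated 'name: value' lines into a dict with lower-case keys."""
    headers = {}
    for line in block.split(b"\r\n"):
        if not line:
            continue  # skip empty lines, e.g. after a trailing CRLF
        name, sep, value = line.decode("latin-1").partition(":")
        if not sep:
            raise ValueError(f"malformed header line: {line!r}")
        headers[name.strip().lower()] = value.strip()
    return headers
```

Lower-casing the names means a later lookup such as headers.get("content-type") works regardless of how the client capitalized the header.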

Lastly, an entity is composed of entity-header fields and an entity-body. Entity-header fields are fields that describe the entity-body, which is a piece of content that can be included in an HTTP message.

A few examples of entity-header fields are:

- Content-encoding

- Content-type

- Content-location

- Content-length

These help describe the entity-body, which follows the headers in the request or response. An extra CRLF follows the last header before the entity-body begins. If there is no entity in the message, the message ends with two CRLFs: one terminating the last header and one marking the end of the headers.

How do you know when to stop processing the entity body? You process the number of bytes defined by the content-length header.
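Putting the pieces together, here is a hedged sketch of splitting a complete message into its parts and cutting the body at content-length. The function name split_message is hypothetical, and the sketch assumes all of the bytes have already been read into memory; a real server would have to keep calling recv until enough bytes arrive.

```python
def split_message(raw: bytes):
    """Split raw request bytes into (request_line, headers, body)."""
    # The blank line (CRLF CRLF) separates the headers from the entity-body.
    head, sep, rest = raw.partition(b"\r\n\r\n")
    if not sep:
        raise ValueError("incomplete message: no blank line after headers")
    request_line, _, header_block = head.partition(b"\r\n")
    headers = {}
    for line in header_block.split(b"\r\n"):
        if line:
            name, _, value = line.decode("latin-1").partition(":")
            headers[name.strip().lower()] = value.strip()
    # No content-length header is treated as "no body" in this sketch.
    length = int(headers.get("content-length", "0"))
    if len(rest) < length:
        raise ValueError("incomplete message: body shorter than content-length")
    return request_line, headers, rest[:length]
```

Any bytes beyond the declared length are ignored here, which is why the body is sliced rather than returned whole.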

Working with Multiple Clients

Powerful web servers like nginx and Apache are able to handle thousands of concurrent clients. This is necessary due to the high volume of traffic that some web applications receive on a regular basis.

There are many different models for handling this load, but most of them revolve around these concepts:

- Multi-threading

- Multi-processing

- Asynchronous I/O

- Synchronous I/O Multiplexing

The last option, Synchronous I/O Multiplexing, is a bit old school but I will be using it in my HTTP server for the sake of learning.

What is Synchronous I/O Multiplexing?

Let’s say you want to listen for incoming connections while still reading from existing connections. You might think you can just use accept and recv to continuously service new connections, but there is a drawback.

accept is a blocking call: it halts the calling thread until a new connection is ready to be accepted.

So can we use non-blocking sockets instead? Not exactly. Non-blocking sockets are great, but they tend to eat up CPU time polling for new traffic. The polling occurs because, for each active socket connection, we need to call recv or send repeatedly to find out whether the socket is ready to communicate.
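To make that cost concrete, here is a minimal sketch of a non-blocking read. The helper name try_recv is hypothetical. When no data is waiting, recv raises BlockingIOError right away rather than pausing, so a server built only on this would have to keep calling it in a loop for every connection.

```python
import socket


def try_recv(sock, bufsize=4096):
    """Attempt a non-blocking read; return None if no data is ready yet."""
    sock.setblocking(False)
    try:
        return sock.recv(bufsize)
    except BlockingIOError:
        return None  # nothing to read right now; the caller must try again
```

A return value of None means "ask again later", while an empty bytes object still means the peer closed the connection.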

Instead, we can use I/O multiplexing to effectively wait on multiple socket signals at the same time. The traditional Unix system call that allows us to “subscribe” to multiple socket notifications is called select.

It’s important to note that select alone is often not enough to make a powerful web server, because we are still handling requests in one thread and in a sequential manner. Processing a request that takes some time to complete (large file read, CPU-intensive work, etc.) will still block other requests from being processed. select can be used alongside multi-threading, multi-processing, or async I/O to manage multiple requests concurrently.

Python gives us two standard library modules for I/O multiplexing, select and selectors, with the former being a low-level interface and the latter being a high-level interface.

I chose to use the low-level interface in my project.
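A minimal sketch of the idea using select.select from the standard library. It is not the project's actual loop: the function name echo_with_select is hypothetical, it replies with the upper-cased message instead of speaking HTTP, and the max_messages and timeout parameters exist only so the sketch terminates.

```python
import select
import socket


def echo_with_select(server_sock, max_messages, timeout=5.0):
    """Serve several clients on one thread, echoing each message in upper case."""
    watched = [server_sock]  # sockets we want readability notifications for
    handled = 0
    while handled < max_messages:
        readable, _, _ = select.select(watched, [], [], timeout)
        if not readable:
            break  # timed out with nothing to do
        for sock in readable:
            if sock is server_sock:
                # The listening socket is readable: a client is waiting,
                # so accept returns without blocking.
                conn, _addr = server_sock.accept()
                watched.append(conn)
            else:
                data = sock.recv(4096)
                if data:
                    sock.sendall(data.upper())
                    handled += 1
                else:  # empty read: the client closed the connection
                    watched.remove(sock)
                    sock.close()
    for sock in watched:
        if sock is not server_sock:
            sock.close()
```

The single call to select.select waits on the listening socket and every connected socket at once, so the loop only touches sockets that actually have something to read.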

Companion (My HTTP Server)

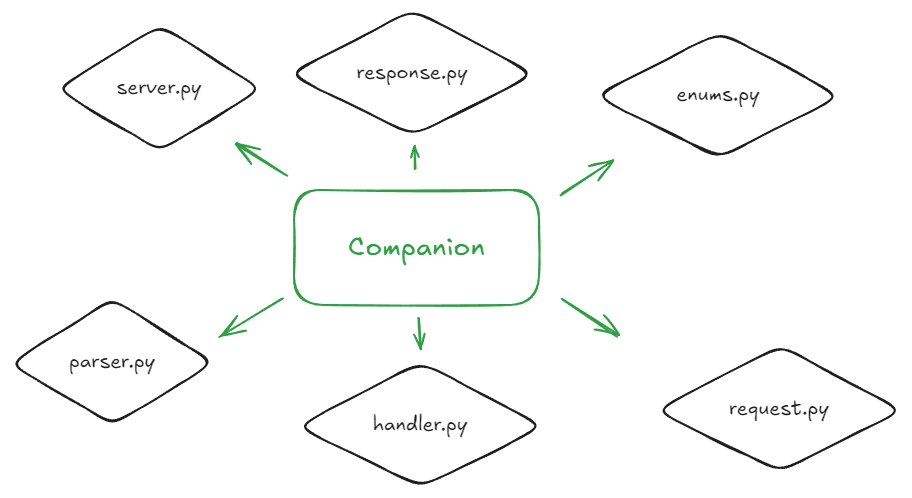

Here is how I chose to structure my HTTP server.

High-level overview of the modules:

server - defines the main HttpServer class, which exposes a run method that starts the server socket and serves new connections.

enums - defines common enumerations used to represent HTTP status codes, etc.

response - defines an HttpResponse class which is used to encapsulate all the details of an HTTP Response

request - exposes an HttpRequest class that encapsulates the details of an HTTP request

parser - handles the parsing of raw bytes into an HttpRequest

handler - exposes an HttpHandler for dealing with specific HTTP methods (GET, HEAD, etc)
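To give a feel for what a response module might contain, here is a hypothetical sketch of a response class. The fields, the to_bytes method, and the include_body flag are all assumptions made for illustration and may not match the project's HttpResponse.

```python
from dataclasses import dataclass, field


@dataclass
class HttpResponse:
    """Hypothetical response container; the real project's class may differ."""
    status_code: int = 200
    reason: str = "OK"
    headers: dict = field(default_factory=dict)
    body: bytes = b""

    def to_bytes(self, include_body: bool = True) -> bytes:
        """Serialize as status line, headers, blank line, then optional body."""
        headers = dict(self.headers)
        headers.setdefault("Content-Length", str(len(self.body)))
        lines = [f"HTTP/1.0 {self.status_code} {self.reason}"]
        lines += [f"{name}: {value}" for name, value in headers.items()]
        head = "\r\n".join(lines).encode("latin-1") + b"\r\n\r\n"
        # A HEAD response carries the same headers as GET but no body.
        return head + (self.body if include_body else b"")
```

A handler for GET could send response.to_bytes(), while a handler for HEAD could send response.to_bytes(include_body=False) and reuse everything else.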

Now, there is a lot more work to be done in each of these modules before this could be a production-ready HTTP server.

Here are some of the things that I glossed over (for the time being):

- Handling all HTTP methods (I only implemented functionality for GET and HEAD)

- Every security concern (people are clever and have found many ways to break an HTTP server, a proper HTTP server needs to spend a lot of time implementing defenses)

- Max performance (requests are still being handled in a synchronous manner; multi-processing, multi-threading, or async could make this faster)

- Parser robustness (there is still some work to do in the parser before it can handle all edge cases within HTTP request formatting)

- A more customizable logging solution

That’s quite a lengthy list, and for good reason. Web servers are complex pieces of software that demand lots of effort and commitment. Making a robust and secure web server shows understanding of several computer concepts, including concurrency, data structures, algorithms, operating systems, and more (depending on how deep your rabbit hole goes).

With that in mind, here are some concepts that I feel my toy web server helped me improve upon:

- Better understanding of the HTTP protocol

- Multiplexing of synchronous I/O and the select system call

- Network programming and sockets (blocking vs non-blocking)

If you’re interested in learning about those concepts, then maybe you’ll consider developing your own web server.

Thank you for reading!
